H.R. 4801: Unleashing AI Innovation in Financial Services Act

The "Unleashing AI Innovation in Financial Services Act" proposes the establishment of AI Innovation Labs by financial regulatory agencies, allowing companies in the financial sector to experiment with artificial intelligence (AI) technologies under a more flexible regulatory environment. Here are the main components of the bill:

Purpose of AI Innovation Labs

The primary goal of the AI Innovation Labs is to let regulated financial entities test AI applications in their operations under agency-approved waivers or modifications of the regulatory requirements that would otherwise apply. The labs are intended to foster innovation by providing a supervised space for experimenting with AI test projects.

Definitions

Key terms in the bill include:

- AI test project: A financial product or service that makes significant use of AI and is subject to federal regulation.

- Financial regulatory agency: A government body that oversees financial entities, including the Securities and Exchange Commission (SEC) and the Federal Reserve.

- Regulated entity: Any company or organization that falls under the authority of a financial regulatory agency.

Application Process

To participate in an AI Innovation Lab, a regulated entity must submit an application to the appropriate financial regulatory agency. The application must include:

- A detailed description of the AI project.

- Any proposed regulatory waivers or modifications the project requires.

- An alternative compliance strategy explaining how the entity will satisfy the relevant regulatory requirements through other means.

- Evidence that the project serves the public interest and does not pose systemic risk.

- A well-defined timetable and business plan for the project.

Agency Review and Approval

Once an application is submitted, the regulatory agency has 120 days to review it. If the application meets the necessary standards, the agency approves it and may impose conditions or limitations on the project. If the application is denied, the agency must explain its reasons, and the entity may address that feedback and resubmit.

Data Security and Reporting

All data that companies share during testing must be handled securely in accordance with established data protection standards. In addition, financial regulatory agencies must report annually, for seven years, on the outcomes of AI test projects, summarizing findings without disclosing information about specific entities.

Enforcement Provisions

The legislation also specifies that, despite the relaxed requirements for AI test projects, regulatory agencies retain the authority to take enforcement action against entities for fraud or other unsafe practices related to their AI implementations.

Relevant Companies

- GS (Goldman Sachs): Could use the AI Innovation Labs for developing algorithmic trading solutions.

- JPM (JPMorgan Chase): Might explore AI capabilities in fraud detection or customer service automation.

- MGNI (Magnite): May leverage AI for optimizing advertising solutions within financial services.

- BLK (BlackRock): Likely to engage in AI-driven risk assessment tools for asset management.

This is an AI-generated summary of the bill text. There may be mistakes.

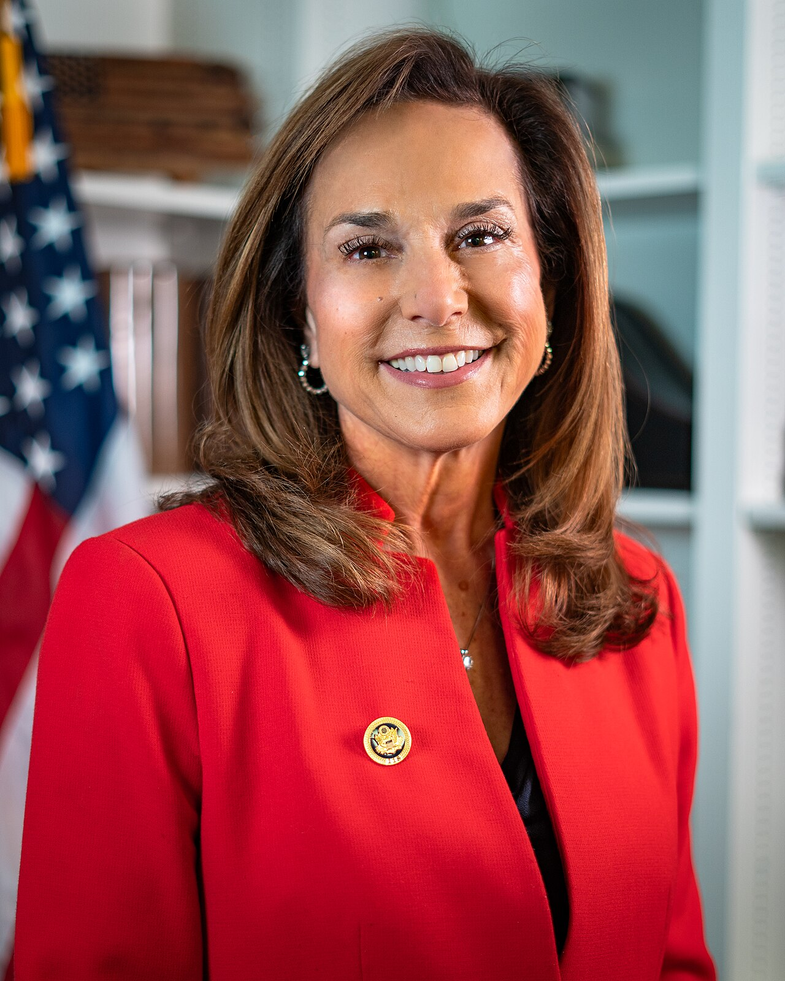

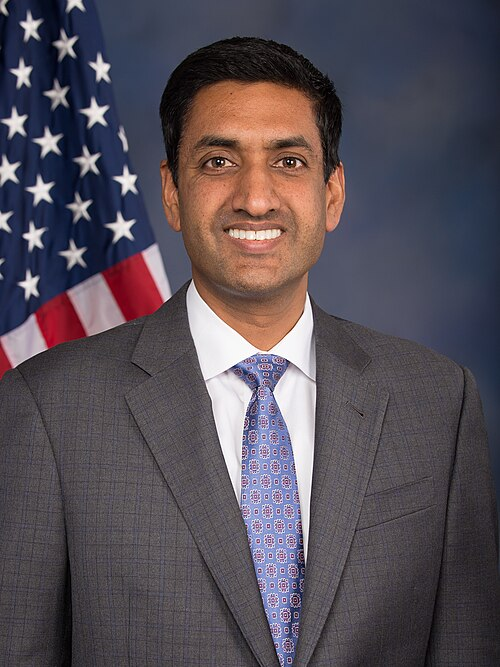

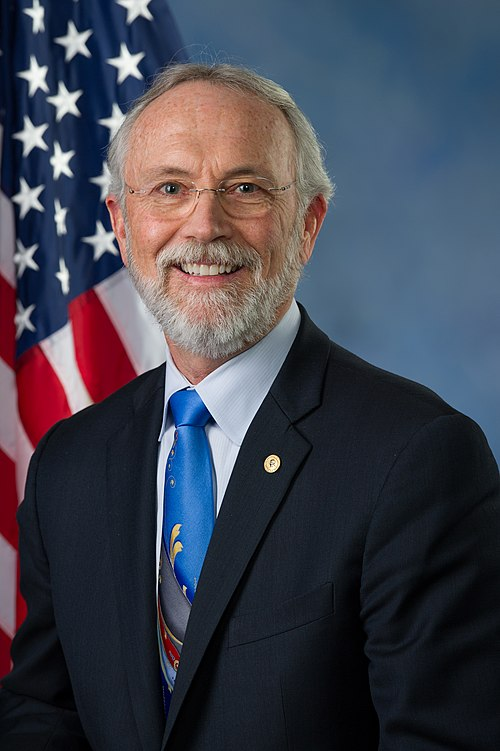

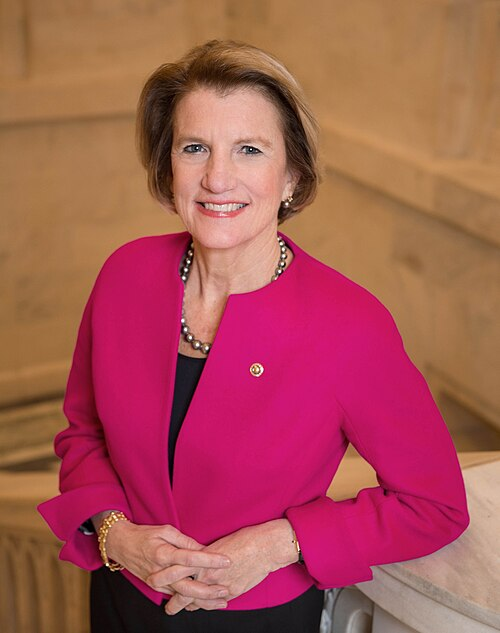

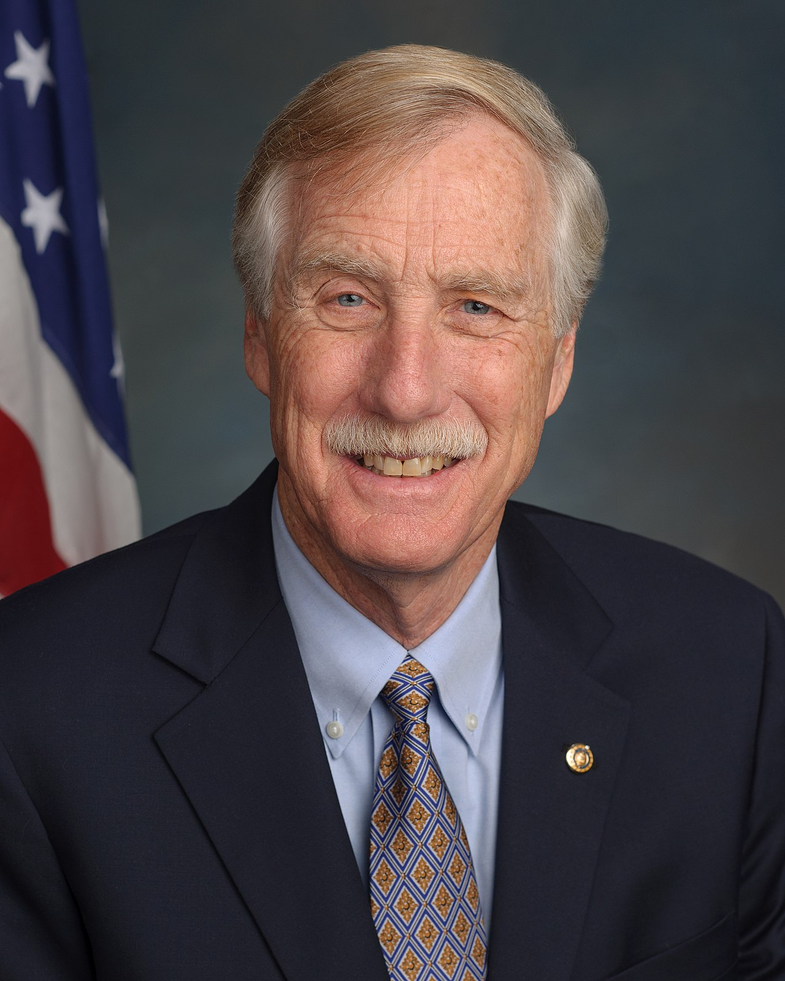

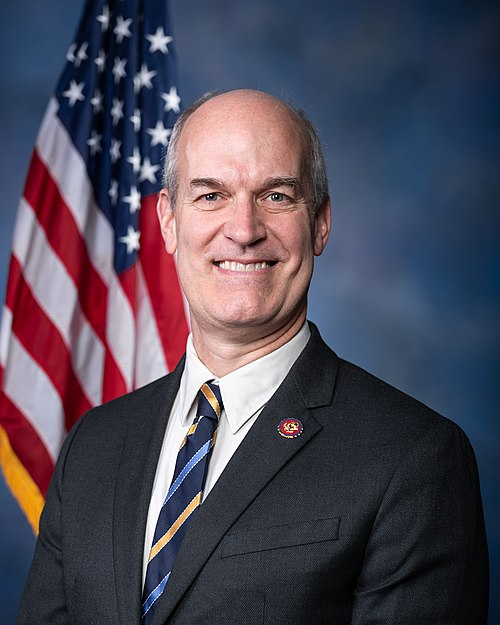

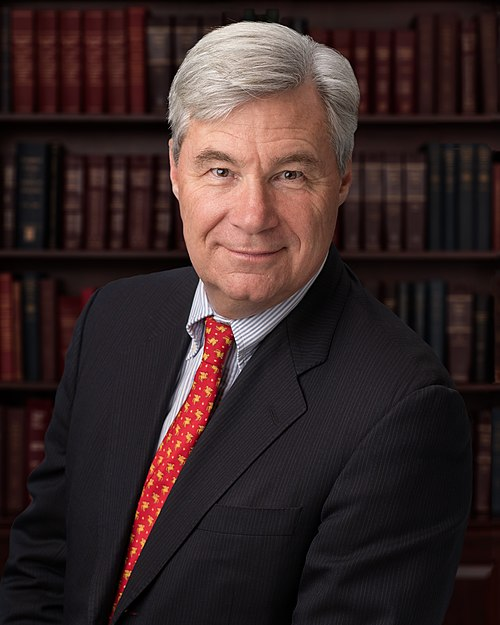

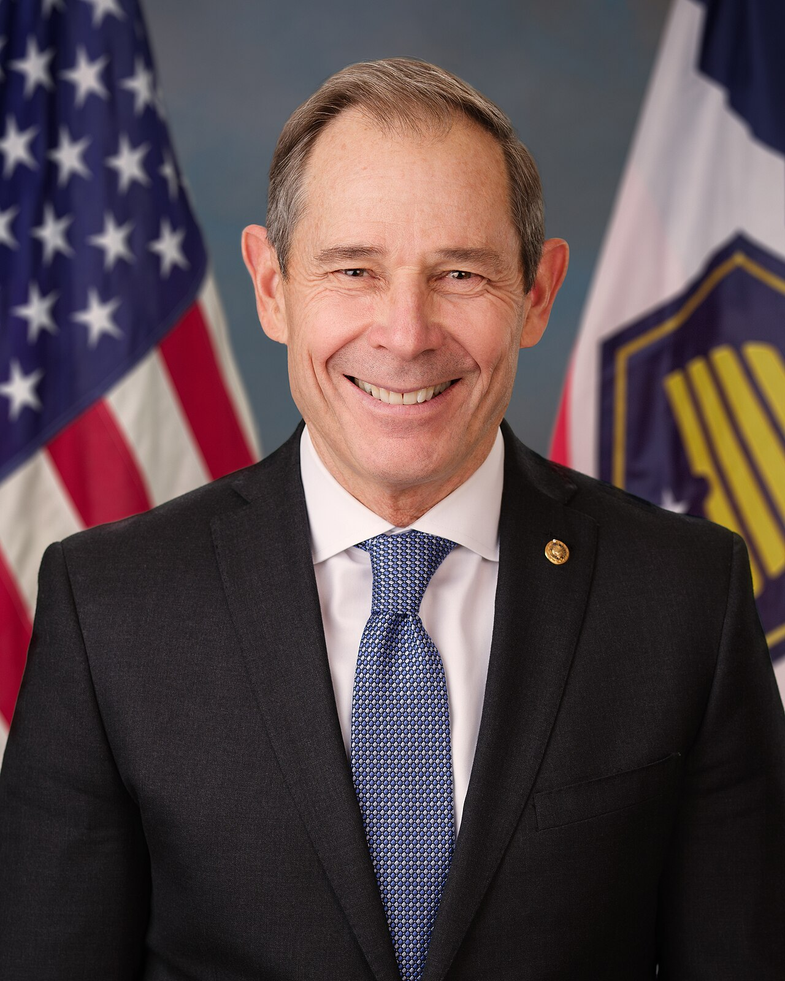

Sponsors

4 bill sponsors

Actions

2 actions

| Date | Action |
|---|---|
| Jul. 29, 2025 | Introduced in House |
| Jul. 29, 2025 | Referred to the House Committee on Financial Services. |

Corporate Lobbying

0 companies lobbying

None found.

* Note that there can be significant delays in lobbying disclosures, and our data may be incomplete.